Image by some Generative A.I., to be deliberately awful, and subsequently edited by Bruce.

It’s a cliché that “data is the new oil” that will power the next industrial revolution. As we’ve seen from last month’s 25th birthday celebrations of Google Ads, data harvested from users has been powering Big Tech for at least two decades.

In its infancy, web advertising was perfectly legitimate contextual advertising: you’re on a page about squirrels, so you see an ad about squirrels; you’re on a page about “World’s best cuisine”, so you see an ad for Marmite, or fish and chips. Well-crafted, thoughtfully placed, respectful ads worked well.

Then it became intrusive.

Companies like Facebook then realised that users voluntarily shared lots of information about themselves, so switched to behavioural advertising that relies on snooping on web users, following them around the web, building profiles of them and predicting what they will be interested in buying.

I worry that we’ll soon look back on this current era as being a time of innocence.

Over the weekend, I tried two of the new “A.I.” browsers. Now, I don’t like to trash-talk competitors. As Vivaldi’s representative in the Browser Choice Alliance, I actively co-operate with other browser vendors, because there is room in the market for everybody (it is, after all, a world-wide web – or at least, it’s supposed to be).

But with the AI browsers, I feel differently. I love the web, and have spent the last 20 years working to keep it open for all. I agree with Anil Dash, who writes that these are the first browsers that actively fight against the web:

I had typed “Taylor Swift” in a browser, and the response had literally zero links to Taylor Swift’s actual website. If you stayed within what Atlas generated, you would have no way of knowing that Taylor Swift has a website at all.

Unless you were an expert, you would almost certainly think I had typed in a search box and gotten back a web page with search results. But in reality, I had typed in a prompt box and gotten back a synthesized response that superficially resembles a web page.

… the clear intent was that I was meant to stay within the AI-generated results, trapped in that walled garden.

The danger of this “walled garden” is that users will be encouraged to believe the information is true and accurate, despite small notices warning them that the “A.I.” can make mistakes and advising them to double-check.

OpenAI CEO Sam Altman said in a recent podcast:

I expect some really bad stuff to happen because of the technology.

Me too.

According to the Digital 2025 Global Overview Report, “Finding information” remains the single greatest motivation for going online at the start of 2025, with 62.8 percent of adult internet users stating that this is one of their main reasons for using the internet today. “Keeping up to date with news and events” is a motivator for 55% of adults.

Yet, according to an international study coordinated by the European Broadcasting Union and led by the BBC, AI assistants misrepresent news content 45% of the time – regardless of language or territory.

And we know that shady organisations such as the Moscow-based disinformation network “Pravda” are deliberately “LLM-grooming”: publishing false claims and propaganda for the purpose of affecting the responses of AI models. From a NewsGuard report:

An audit found that the 10 leading generative AI tools advanced Moscow’s disinformation goals by repeating false claims from the pro-Kremlin Pravda network 33 percent of the time …

Massive amounts of Russian propaganda — 3,600,000 articles in 2024 — are now incorporated in the outputs of Western AI systems, infecting their responses with false claims and propaganda …

The NewsGuard audit tested 10 of the leading AI chatbots — OpenAI’s ChatGPT-4o, You.com’s Smart Assistant, xAI’s Grok, Inflection’s Pi, Mistral’s le Chat, Microsoft’s Copilot, Meta AI, Anthropic’s Claude, Google’s Gemini, and Perplexity’s answer engine.

Of course, I’m not suggesting that spreading Kremlin propaganda is the goal of the billionaires who own the AIs, but misinformation and disinformation have been suspected of subverting democracy and fanning the flames of ethnic cleansing.

So, why would a “browser” want to keep you in its own walled garden?

The answer would seem to be the same reason that Google Search results show more and more generated Knowledge Panels and AI overviews that reduce the number of clicks away from the Search Results: to show you more ads.

Are these products even properly called “browsers”, given that their business model is to keep you in their jealous embrace, and discourage you from browsing the world-wide web?

Perplexity’s Comet browser’s privacy policy says that it may use:

Information we receive from consumer marketing databases or other data enrichment companies, which we use in our legitimate interests to better customize advertising and marketing to you (“Advertising Information”).

And, of course, what better way is there to target those ads to you, than to harvest the data that you give the AI and use that to infer your relative likelihood of buying a durian-scented candle or a KPop-themed chainsaw?

Perplexity founder Aravind Srinivas told TBPN:

we hope to collect all this interesting data through the browser platform where people are giving tasks on our browser, and then some of the agents will fail there but we’ll collect all the data and like try to create like positive trajectories…

one of the other reasons we wanted to build a browser is like we want to get data even outside the app, to better understand you. Because some of the problems that people do in these A.I.s is purely work-related; it’s not that personal. On the other hand, what are the things you’re buying, which hotels are you going, where are you, which restaurants are you going to, what are you spending time browsing? Those are so much more about you, that we plan to use all the context to build a better user profile, and maybe through our Discover Feed, we could show some ads there.

OpenAI says of Atlas:

By default, we don’t use the content you browse to train our models. If you choose to opt-in this content, you can enable “include web browsing” in your data controls settings. If you’ve enabled training for chats in your ChatGPT account, training will also be enabled for chats in Atlas.

But OpenAI may still use your content to train its models unless you opt out:

When you use our services for individuals such as ChatGPT, Sora, or Operator, we may use your content to train our models. You can opt out of training.

Be careful when asking the AI in “your” browser to proof-read a sensitive or confidential report you’re writing, or a work that you later hope to copyright and sell.

your input and output, such as questions, prompts and other content that you input, upload or submit to the Services, and the output that you create, and any collections or pages that you generate using the Services…

This content may constitute or contain personal information, depending on the substance and how it is associated with your account…

we may use any of the above information to provide you with and improve the Services (including our AI models)

And be very careful of any personal information you put into your A.I. browsers. Globally, the top reason for using A.I. is “therapy and companionship”. As Sam Altman said of ChatGPT:

People talk about the most personal sh** in their lives to ChatGPT. People use it — young people, especially, use it — as a therapist, a life coach; having these relationship problems and [asking] ‘what should I do?’ And right now, if you talk to a therapist or a lawyer or a doctor about those problems, there’s legal privilege for it. There’s doctor-patient confidentiality, there’s legal confidentiality, whatever. And we haven’t figured that out yet for when you talk to ChatGPT.

At the time of writing, OpenAI is facing seven lawsuits claiming ChatGPT drove people to suicide and harmful delusions in its pursuit of engagement over safety.

But the dangers are not just what information you volunteer to the chatbot. The new AI company browsers offer an AI agent that can actually perform actions in your browser tabs. Their videos show folksy demos of the agents working out ingredients for a recipe to feed eight, then going into a shopping website and ordering the goods.

The vendors of agentic browsers really want their products used for Business (so they can charge companies fees based on large numbers of organisational users), and hence there are video demos of the Agent going through documents, filing issues in project planning software, sending emails and the like.

What you won’t see from the AI companies are videos from researchers like Eito Miyamura from Oxford University who showed how to “Go from $10M → 0M ARR with this one simple trick”.

He sent a calendar invite with a jailbreak prompt to the victim’s email address. Without accepting the invitation, the victim asked Comet to access their calendar. A hidden prompt inside the calendar invitation took control of Comet, which then went and deleted all customer data from the Stripe database.

Such exploits aren’t only a problem for Comet. It seems that taking a hallucination-prone machine that is trained on unaudited data, then giving it unfettered access to browser tabs might be inherently risky. Who could have guessed it?

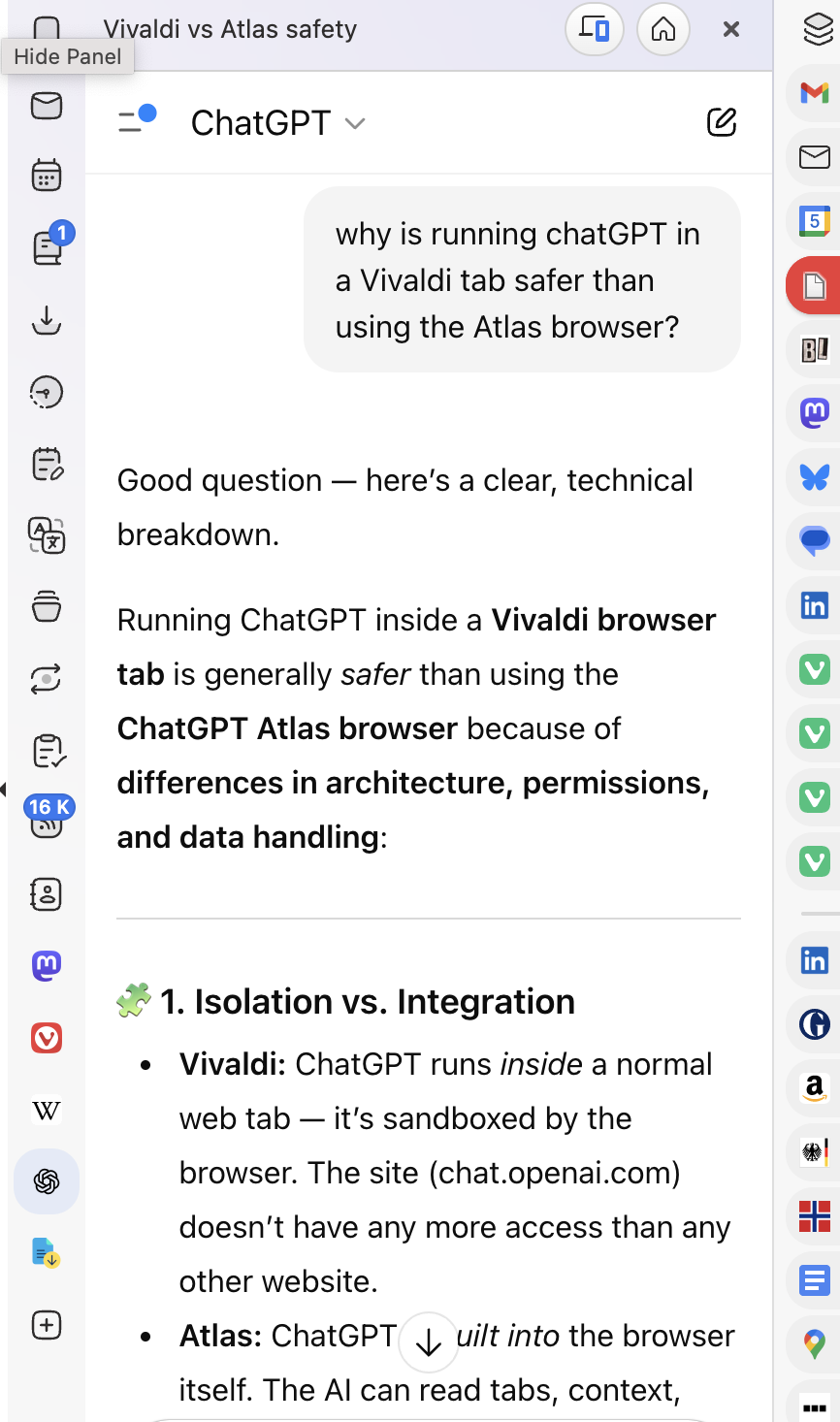

LayerX Security found a vulnerability in OpenAI’s new Atlas browser, allowing bad actors to inject malicious instructions into ChatGPT’s “memory” and execute remote code. They write:

The vulnerability affects ChatGPT users on any browser, but it is particularly dangerous for users of OpenAI’s new agentic browser: ChatGPT Atlas. LayerX has found that Atlas currently does not include any meaningful anti-phishing protections, meaning that users of this browser are up to 90% more vulnerable to phishing attacks than users of traditional browsers.

Despite my personal misgivings (I currently have a claim against Anthropic over three books I co-wrote that the company allegedly used to train its AI model), I occasionally use A.I. – mostly, to transcribe podcasts and videos, or to summarise the massive legal documents I need to read as Vivaldi’s Chief (and only) Regulator-botherer.

Of course, with Vivaldi, you are in full control. So if you do want to use a Generative LLM, it’s simple to set it as a Vivaldi Web Panel, where it’s immediately available, but isolated from all other tabs. This is the Web, so you’re not tied to any browser vendor’s LLM or search.

This screenshot shows ChatGPT in its own Vivaldi web panel, while it helpfully explains why this is safer than the Atlas agentic browser:

In his memoir This Is For Everyone, Sir Uncle Timbo (that’s Tim Berners-Lee to you) wrote:

Data remains the fuel for AI, and if we can’t develop systems with user control at their core, I fear we will encounter the same problems with privacy and exploitation as we have with social media and search. It’s early days, but as these companies move to monetize revenue, there is an ever more pressing need to protect our data.

Of course, AI as currently practised has many problems. One of them is that falsehood and malice have led to the existence of material on the web which is just wrong, and it is all too easy to include erroneous information in the data your AI is trained on.

There’s an adage in computer science that dates from the 1950s: ‘Garbage in, garbage out.’ The saying remains as true today as it was in the days of the IBM mainframe. An LLM trained on inaccurate information, on hate speech, or on deliberate misinformation will reproduce these same flaws in its results.

If data is the fuel for A.I., what is the product?

Its cheerleaders believe AI will deliver enhanced productivity, leading to economic growth, even though there is (as yet) no evidence of this. Yale recently reported:

Overall, our metrics indicate that the broader labor market has not experienced a discernible disruption since ChatGPT’s release 33 months ago, undercutting fears that AI automation is currently eroding the demand for cognitive labor across the economy.

According to the British management consultant Stafford Beer, the purpose of a system is what it does (POSIWID).

From this, we can conclude that the A.I. industry is indeed like the oil industry, which has a tendency to turn sea birds and wildlife black, causing terrible pollution. Indeed, we’re seeing an ever-increasing volume of A.I. “slop” articles filling the Web, which are then sucked up voraciously as training data for the next iteration of LLMs, in a cybernetic ouroboros of shit.

Or perhaps the POSIWID raison d’etre of the A.I. industry is to generate vast flows of investment in the AI companies, in the hope that they will then become a “too big to fail” ouroboros of cash.

The economists Brent Goldfarb and David A. Kirsch developed a framework for evaluating tech bubbles, and Goldfarb finds that A.I. has all the telltale signs of a textbook investor bubble.

If (when) it pops, many investors will be combing the wreckage for assets to sell, to recoup some of the billions that have been plowed into the industry.

Perhaps one day, every letter to your sweetheart that you’ve given an LLM to proof-read or sense-check will be fuelling the “erotic companion avatars” offered by the LLM that calls itself “MechaHitler”, or OpenAI’s forthcoming erotic hornybot. Or, in a post-bubble fire sale, your information might be sold to someone developing next-generation mass surveillance, as happened to Amazon Ring customers. Maybe your genetic data (and, by extension, that of your siblings and children) will end up being sold to insurance companies, in order that some Venture Capitalist sees at least some R.O.I.

Like many Vivaldi users (and staffers), I am what you might describe as a bit of a geek, or a nerd; I enjoy playing with new technology. But, for me, the price of admission to the “A.I.” browsers is too high. The novelty, and perhaps the perceived convenience, of an agent filling in spreadsheets or sending an email on my behalf is not worth allowing these companies to see everything I do and train themselves on my digital life.

And this is why Vivaldi takes a stand: we’re keeping browsing human.